In AI Cubed, and the wider Digital, Data and Technology (DDaT) portfolio, we explore new ways to use AI for public sector innovation and digital sovereignty. Last week marked AI Appreciation Day, making it a timely moment to reflect on how our work in the Department for Business and Trade (DBT) is helping shape a more transparent, efficient and trustworthy digital future.

AI Cubed represents our holistic approach of:

- AI Lab: for rapid experimentation

- AI Factory: to productise successful pilots

- AI Operations: to scale and grow AI solutions across DBT

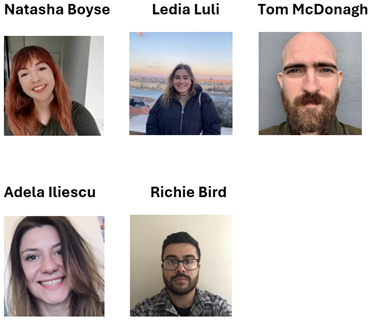

As part of this work, a team of 5 DBT data engineers and data scientists took part in a hackathon hosted by the French government. The goal of the hackathon was to integrate new AI tooling into existing open-source software developed by the French government, known as La Suite numérique. We created a fact-checking tool and presented the prototype to a panel of judges.

The challenge of misinformation

For our hackathon project, we chose to focus on a vital issue in today’s world: misinformation. It is a growing problem that affects public discourse and trust in online content. Our team developed a Python package, FactVerifAI, as an AI-driven solution that integrates directly into document editors to fact-check users’ statements.

What is FactVerifAI?

We created FactVerifAI to facilitate robust analysis and verification of statements using large language models (LLMs) and web search application programming interfaces (APIs).

It's important to note that LLMs are not reliable stores of factual knowledge. Rather, they are trained on vast datasets to generate human-like responses based on patterns in the training data. As such, FactVerifAI does not supply facts itself. Instead, it helps identify potentially verifiable claims within text and retrieves relevant evidence from web sources to aid verification.

The primary workflow is:

- Extract - given an input text, FactVerifAI uses its extract claims function (see code) to identify fact-checkable claims based on the model's understanding.

- Retrieve - for each extracted claim, it queries web search APIs to retrieve relevant evidence.

- Score - evidence is scored by the LLM from 0% (no supporting evidence) to 100% (strongly backed by credible sources). In our scenario, we used Llama 3.1.

- Generate - a JavaScript Object Notation (JSON) report is generated containing the original claims, retrieved evidence, scores, and references to facilitate auditing and transparency.
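The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not FactVerifAI's actual code: the names `Evidence`, `ClaimResult`, `extract_claims`, `build_report`, `fake_search` and `fake_score` are all hypothetical, the extraction step is a naive sentence splitter standing in for an LLM call, and the search and scoring steps are stubs standing in for a web search API and an LLM scorer.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Callable


@dataclass
class Evidence:
    url: str
    snippet: str


@dataclass
class ClaimResult:
    claim: str
    evidence: list = field(default_factory=list)
    score: int = 0  # 0 = no supporting evidence, 100 = strongly backed


def extract_claims(text: str) -> list:
    """Extract step (assumption: a naive sentence split replaces the LLM)."""
    return [s.strip() for s in text.split(".") if s.strip()]


def build_report(
    text: str,
    search: Callable[[str], list],
    score: Callable[[str, list], int],
) -> str:
    """Run extract, retrieve and score, then generate a JSON report."""
    results = []
    for claim in extract_claims(text):
        evidence = search(claim)          # Retrieve
        rating = score(claim, evidence)   # Score
        results.append(ClaimResult(claim, evidence, rating))
    # Generate: claims, evidence, scores and references in one document
    return json.dumps([asdict(r) for r in results], indent=2)


def fake_search(claim: str) -> list:
    """Stub for a web search API (hypothetical data)."""
    corpus = {
        "Paris is the capital of France": [
            Evidence("https://example.org/paris", "Paris is the capital of France.")
        ]
    }
    return corpus.get(claim, [])


def fake_score(claim: str, evidence: list) -> int:
    """Stub for LLM scoring: full marks if any evidence was found."""
    return 100 if evidence else 0


if __name__ == "__main__":
    sample = "Paris is the capital of France. The moon is made of cheese."
    print(build_report(sample, fake_search, fake_score))
```

Passing the search and scoring functions in as arguments keeps the pipeline independent of any one provider, which is one way a design like this could accommodate new data sources or models.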

The tool supports parallel processing for improved performance. It can work with locally hosted LLMs via Ollama, as well as with OpenAI backends. It is designed to accommodate new data sources or domain-specific and language-specific models, such as Albert, which provides generative AI models to French government entities.
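As an illustration of how parallel processing and swappable backends can fit together, here is a minimal Python sketch. It assumes a thread pool and a small backend interface; FactVerifAI's real mechanism may differ. The names `ScoringBackend`, `KeywordBackend` and `score_claims` are hypothetical, and the toy backend stands in for a real Ollama or OpenAI client.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Protocol


class ScoringBackend(Protocol):
    """Any object with a score(claim) method can act as a backend."""

    def score(self, claim: str) -> int: ...


class KeywordBackend:
    """Toy backend (assumption): a real one would call an LLM service."""

    def __init__(self, known_facts: set) -> None:
        self.known_facts = known_facts

    def score(self, claim: str) -> int:
        return 100 if claim in self.known_facts else 0


def score_claims(claims: list, backend: ScoringBackend, max_workers: int = 4) -> dict:
    """Score every claim concurrently; pool.map keeps the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(backend.score, claims))
    return dict(zip(claims, scores))


if __name__ == "__main__":
    backend = KeywordBackend({"Water boils at 100C at sea level"})
    print(score_claims(
        ["Water boils at 100C at sea level", "The moon is made of cheese"],
        backend,
    ))
```

Threads suit this kind of workload because each claim spends most of its time waiting on network calls to a search API or model server, so several can be in flight at once.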

The key purpose is to augment and accelerate the fact-checking process, while ensuring users can scrutinise the underlying evidence sources and rationale.

Strengthening European digital infrastructure

Our prototype garnered positive feedback from participants and partners like DINUM and La Suite. They recognised its potential as a component of trustworthy European digital infrastructure aligned with shared goals like transparency, data protection, and interoperability.

Showcasing AI Cubed and Redbox

During our visit with the DBT France team, we showcased our AI assistant, Redbox. We also discussed DBT's approach to the responsible integration of AI systems using the AI Cubed model, which encourages efficient delivery and transformation.

Redbox is our cutting-edge generative AI tool designed to help civil servants efficiently analyse, summarise, and extract insights from unstructured data sources like documents, websites, and databases. It also features the capability to leverage multi-agent tool invocation, enabling more complex and parallel processing tasks.

The DBT France team were impressed by the tool’s ability to quickly distil complex information into actionable insights. They highlighted Redbox’s potential to streamline research, analysis, and briefing processes for policy teams, particularly for cross-border policy work.

The tool’s multilingual capabilities, including fluent French, make it well suited to international work, addressing a key requirement for modern digital infrastructure serving diverse global audiences. The feedback reinforced Redbox's distinctive value as a reliable, capable and ethically aligned AI assistant, purpose-built to empower public sector innovation and decision-making.

This visit represents an important step in DBT's AI roadmap. By showcasing tools like Redbox to international partners, we aim to facilitate knowledge exchange and identify areas for collaboration. This will contribute to building reliable, interoperable digital infrastructure to serve the wider European tech community.

The road ahead

While FactVerifAI was built purely for the purposes of the hackathon, we can already see ways to use it in our existing DBT AI products. For example, the tool could be used to verify the citations and evidence Redbox provides, reducing the likelihood of hallucinations.

Next steps would include refining the AI model's handling of nuanced language, improving the user interface for displaying references, and exploring multilingual support to serve international audiences.

Building a sovereign digital future

Initiatives like FactVerifAI and Redbox demonstrate DBT's dedication to harnessing AI's potential while prioritising values of reliability, sovereignty, and public trust. As we navigate an increasingly digital landscape, advancing coordination across borders and building our own open tools is not just a technical endeavour but a strategic one. This is a path toward a more reliable and sovereign digital future for Europe.

Want to learn more about DBT's AI successes? Read this blog about how and why DDaT has taken the important step of self-hosting LLMs within a secure, internal environment.

2 comments

Comment by Tanveer Bhatti posted on

One strength of FactVerifAI seems to be that it prevents misinformation from entering an AI model in the first place. This is more effective than trying to fix the model's errors later.

In my role co-chairing the Bank of England’s working group on AI Edge risks, I see a clear opportunity here. We should systematically record every time FactVerifAI fails, especially in difficult situations like ambiguous statements, foreign-language text, or intentionally deceptive questions. This record of failures would be a vital resource for the banking sector to help it prepare for and manage unexpected risks from AI systems.

Comment by Leo posted on

Will there be a published list of the misinformation that VerifiAI has identified as such? If not what is the process of identifying misinformation? How will this process remain accountable? Can information classified as misinformation be brought back into AI models if later identified as information?